SLoMO aims to bring together researchers working on the understanding of long-form, edited videos—such as movies and TV episodes. We focus on two key aspects:

- Audio Description (AD) Generation focuses on producing concise, coherent, story-driven narrations for blind and visually impaired (BVI) audiences that complement the information provided by the original audio.

We host a series of invited talks addressing key open questions:

- How can story-level information be effectively perceived and utilized in downstream tasks?

- How can fair evaluation be ensured (e.g., for AD), and how can data leakage into large-scale pre-trained models be minimized?

- What are the current limitations of Audio Descriptions, and what are the next steps toward practical automatic AD generation?

- Movie Question Answering evaluates a model's ability to comprehend narratives, particularly through story-level reasoning and long-context modeling.

To advance research in this direction, we present the Short-Films 20K (SF20K) Competition for story-level movie understanding.

The workshop will be held on the morning of 19th October, 2025, at the Honolulu Convention Center.

| Time | Session | Speaker / Details | Slides |
|---|---|---|---|
| 08:40 - 08:50 | Opening Remarks | | link |
| 08:50 - 09:20 | Invited Talk 1 | Prof. Makarand Tapaswi (IIIT Hyderabad) | link |
| 09:20 - 09:50 | Invited Talk 2 | Prof. Angela Yao (NUS) | link |
| 09:50 - 10:40 | SF20K Competition | Result announcements & presentations | link |
| 10:40 - 11:00 | Coffee Break | | |
| 11:00 - 11:30 | Invited Talk 3 | Prof. Amy Pavel (UC Berkeley) | |
| 11:30 - 12:00 | Invited Talk 4 | Prof. Mike Zheng Shou (NUS) | |
| 12:00 - 12:30 | Panel Discussion & Closing Remarks | | link |

-

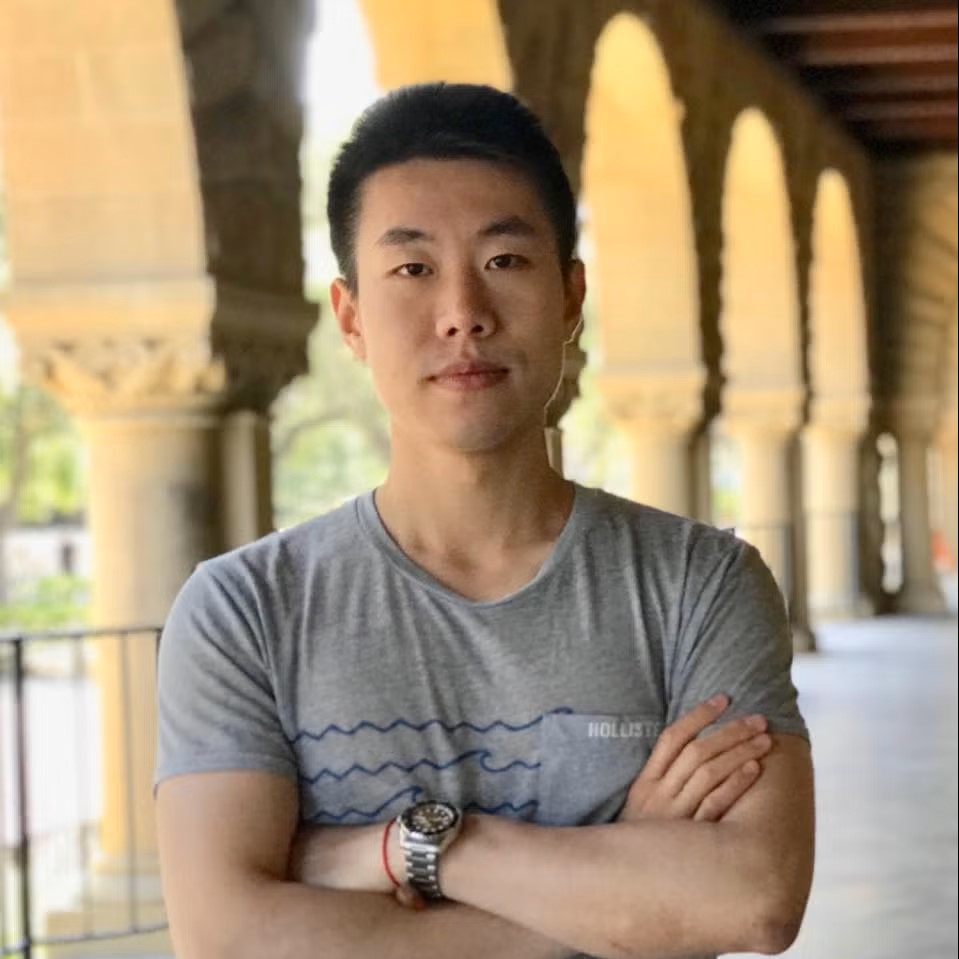

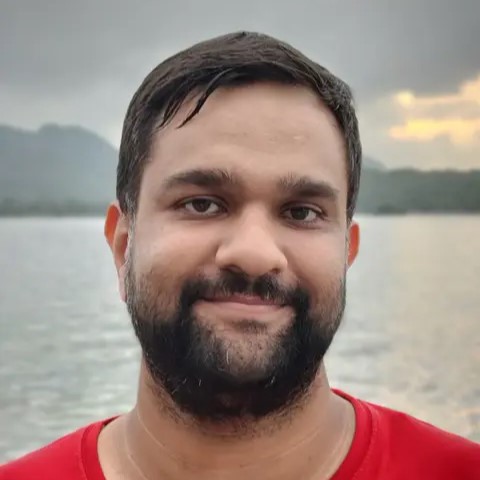

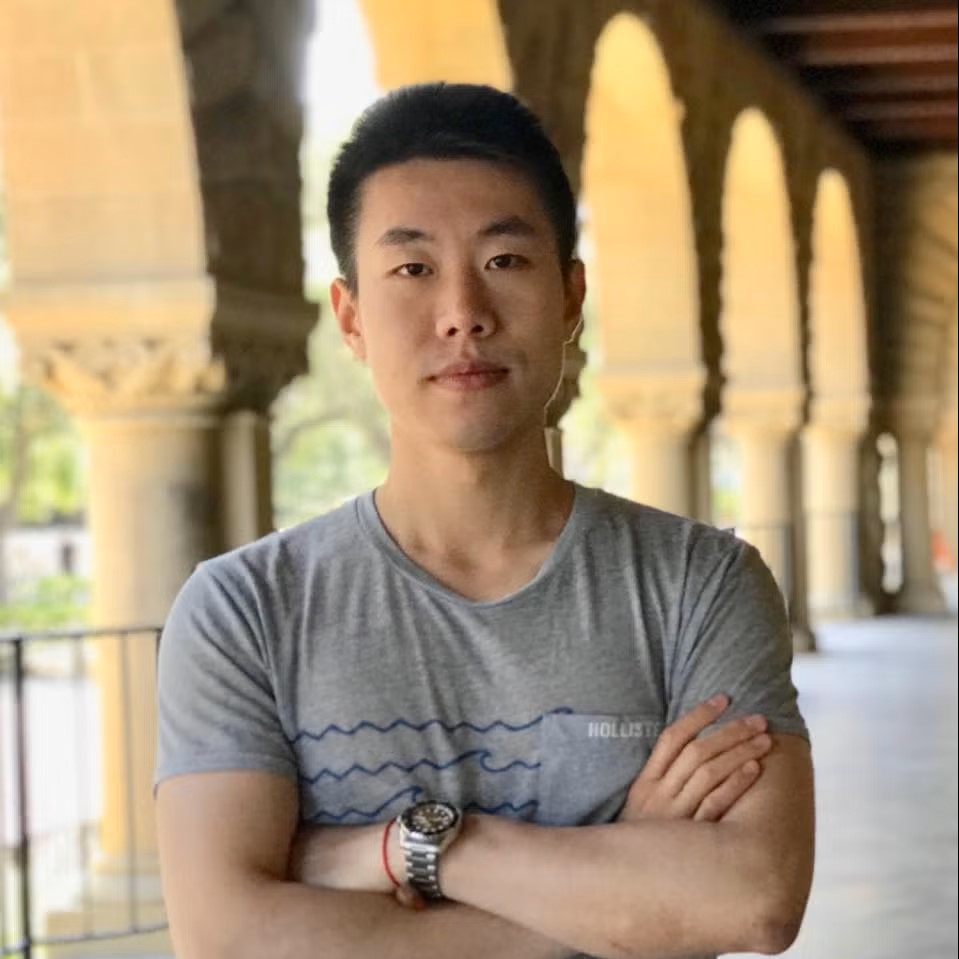

University of California, Berkeley

-

National University of Singapore

-

National University of Singapore

-

IIIT Hyderabad · Wadhwani AI

Short-Films 20K (SF20K) Competition

The Short-Films 20K (SF20K) Competition aims to advance story-level video understanding by leveraging the new SF20K dataset. While recent multimodal models have demonstrated progress in video understanding, existing benchmarks are largely limited to short videos with simple narratives. In contrast, our competition focuses on complex, long-term reasoning in storytelling by introducing multiple-choice and open-ended question answering tasks.

The competition is based on SF20K-Test-Expert, a subset of the SF20K dataset, which includes manually crafted open-ended questions. The questions are designed to be challenging, requiring long-term reasoning and multimodal understanding of the video content.

Competition Format

This edition features two tracks focused on Open-Ended Video Question Answering: the Main Track and the Special Track.

Competition Phases

The competition is divided into two phases:

- Phase 1: Public Test Set Evaluation

  During this initial phase, methods are evaluated against the public test set. This phase is primarily for validation purposes, allowing participants to refine their approaches.

- Phase 2: Private Test Set Evaluation

  For the private leaderboard, we will evaluate models on new, unreleased movies to prevent data contamination. This private test set will be released on October 3rd, 2025. Final rankings will be determined based on performance in this phase.

Important Dates

- 01 Jul, 2025: The competition server launches with data from the public test set

- 03 Oct, 2025: Submission deadline for leaderboard ranking on the public test set

- 03-10 Oct, 2025: Private test set evaluation

- 10 Oct, 2025: Final rankings announced on the private test set

- 19 Oct, 2025: Workshop at ICCV 2025 with winners' presentations

Final Rankings

Main Track

Special Track

- University of Oxford

- École Polytechnique, IP Paris

- Google DeepMind

- Google DeepMind

- Google DeepMind

- École Polytechnique, IP Paris

- École des Ponts ParisTech

- Shanghai Jiao Tong University

- École Polytechnique, IP Paris

- MBZUAI

- University of Oxford
